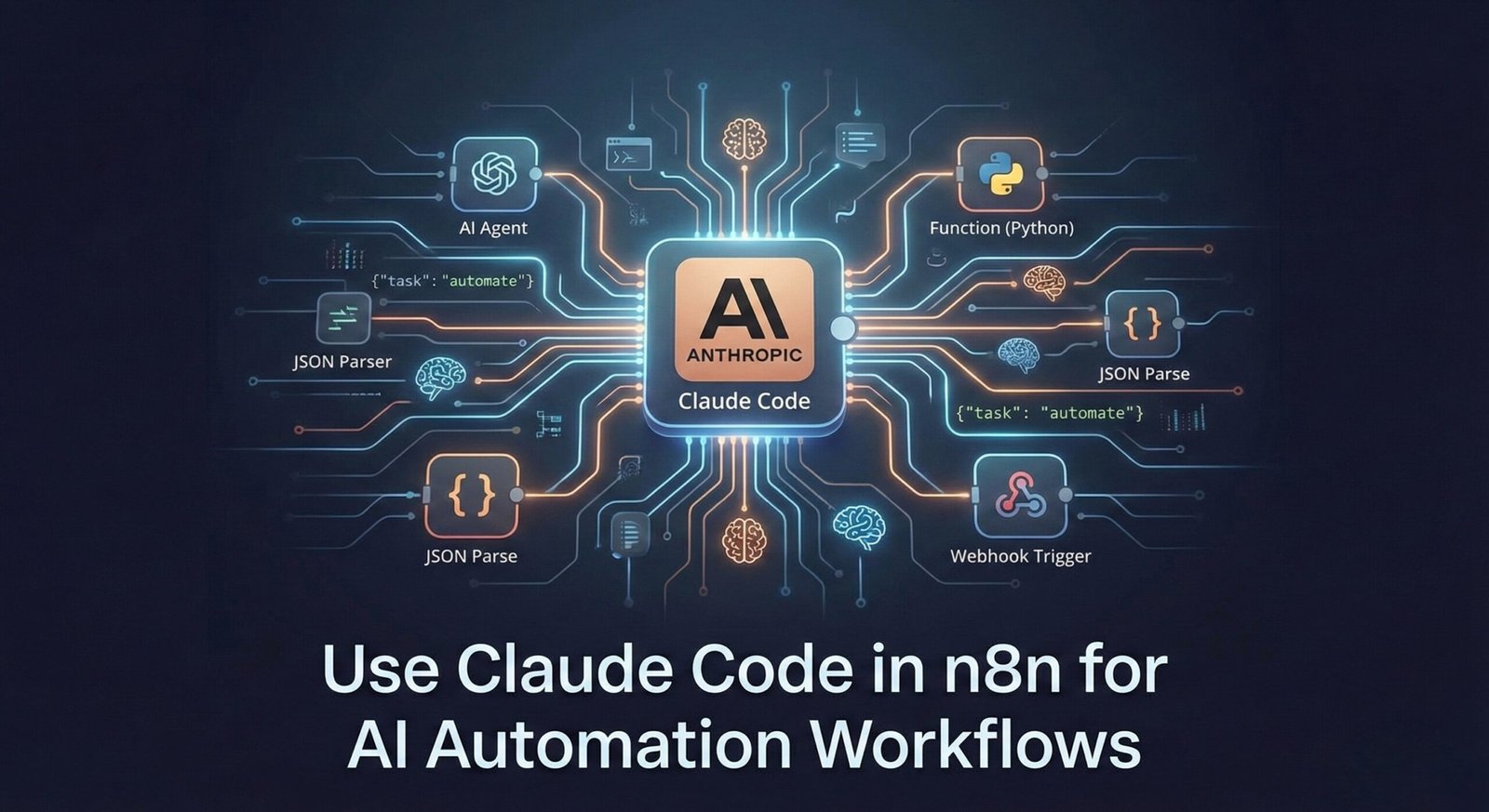

Use Claude Code in n8n for AI Automation Workflows

You know the stare. It happens usually around 2 PM on a Tuesday. You have the Function Node open in n8n. The upstream API just sent you a payload that looks like it was formatted by a toddler who hates JSON. It’s nested three layers deep, the keys are inconsistent, and you need to extract one specific ID to pass to your CRM.

You start typing const data = items[0].json... and then you stop. You open a new tab. You search for regex patterns. You sigh. This is the bottleneck. This is where automation stops feeling like magic and starts feeling like manual labor.

For the longest time, n8n was about connecting pipes. You get water from pipe A, send it to pipe B. But if the water turned into ice halfway through, your pipes burst. You had to write the logic to handle that state change yourself.

That changed when we started putting Claude Code in n8n.

I’m not talking about just asking a chatbot to write a poem about your data. I’m talking about injecting a brain directly into your workflow plumbing. We are moving past the era of rigid if/else statements and entering a phase where the workflow understands what you want, even if you ask for it poorly.

We aren’t just building automations anymore. We are architecting systems that can think.

Handing the Keys to the Ferrari

Let’s get the setup out of the way because you can’t drive if you don’t have the keys.

To get this working, you need to connect Anthropic to your n8n instance. Why Anthropic? Why not just stick with the other guys? Because of Anthropic Claude 3.5 Sonnet.

In the coding world, models have personalities. Some are creative; they make great marketing copy. Others are strict. Claude 3.5 Sonnet is the developer’s model. It understands syntax, structure, and logic better than it understands creative flair. When you ask it to return JSON, it almost always returns JSON. It rarely gives you a preamble about how happy it is to help you. It just does the work.

That precision is what makes n8n AI automation workflows actually reliable enough for production.

You’ll want to grab your API key from the Anthropic console. In n8n, you have two main ways to use this power: the standard AI Agent node or the HTTP Request node if you want to get bare metal with the API. Stick to the Agent node for now. It handles the memory and context management so you don’t have to write a history buffer from scratch.

A quick note on settings: Do not skimp on max_tokens. I see people setting this to 200 to save pennies. Don’t do that. Give the model room to think. The limit caps the length of the whole reply, so a tight one doesn’t just cut the reasoning short; it can chop a JSON object off mid-bracket and break whatever node comes next.
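
If you do go bare metal with the HTTP Request node, the call looks roughly like the sketch below. The URL, header names, and body fields follow Anthropic's public Messages API; the function name buildAnthropicRequest, the placeholder key, the default of 1024 tokens, and the model ID are my own assumptions for illustration, so check the console for the model IDs available to you.

```javascript
// Sketch only: builds the pieces of a Messages API call without sending it.
// buildAnthropicRequest is a hypothetical helper, not an n8n built-in.
const ANTHROPIC_API_URL = "https://api.anthropic.com/v1/messages";

function buildAnthropicRequest(apiKey, prompt, maxTokens = 1024) {
  if (!apiKey) throw new Error("Missing Anthropic API key");
  return {
    url: ANTHROPIC_API_URL,
    method: "POST",
    headers: {
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
      "content-type": "application/json",
    },
    body: JSON.stringify({
      model: "claude-3-5-sonnet-20241022", // assumed model ID
      max_tokens: maxTokens, // upper bound on the length of the reply
      messages: [{ role: "user", content: prompt }],
    }),
  };
}

// "sk-ant-example" is a placeholder, not a real key.
const request = buildAnthropicRequest("sk-ant-example", 'Return {"ok": true} as JSON.');
console.log(request.headers["anthropic-version"]);
```

In the HTTP Request node you would enter the same URL, headers, and JSON body by hand, and keep the key in an n8n credential rather than pasting it into the workflow.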

The Plumbing Meets the Poet

Here is the biggest lie in automation: “Data is structured.”

It never is. You hook up a webhook to an email parser, and suddenly your “structured” lead data is a wall of text because the prospect replied from an iPhone and their signature got mixed in with the message body.

The old way to fix this was painful. You’d write fifty lines of JavaScript. You’d try to split the string by newlines, look for keywords like “Phone:”, and hope nobody made a typo. It was brittle. One change in the input format and your entire workflow crashed.
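
To make the brittleness concrete, here is a stripped-down sketch of that old approach. The function name and the sample emails are invented for illustration; the real scripts were longer, but they failed the same way.

```javascript
// The brittle approach: split on newlines and look for a literal "Phone:" label.
function extractPhoneByLabel(emailBody) {
  for (const line of emailBody.split("\n")) {
    if (line.startsWith("Phone:")) {
      return line.slice("Phone:".length).trim();
    }
  }
  return null; // label missing or misspelled
}

const tidy = "Name: Dana\nPhone: 555-0100";
const messy = "Name: Dana\nPhne: 555-0100\nSent from my iPhone";

console.log(extractPhoneByLabel(tidy)); // → 555-0100
console.log(extractPhoneByLabel(messy)); // → null
```

One typo in the label and the number is gone, even though any human reading the email would find it instantly.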

This is where JSON parsing with AI changes the game.

Instead of writing code to parse the text, you use Claude to extract the intent of the text. You pass the messy email body into the node and give it a prompt.

“Extract the user’s phone number and sentiment. Return it as strict JSON.”

That’s it.

I remember the first time I replaced a complex regex block with a single prompt. I was staring at this nasty invoice scraping workflow I’d built. It broke every week. I deleted the script. I dropped in the AI node. I pasted the prompt.

It worked.

It handled the PDF that was scanned upside down. It handled the invoice where the total was written in handwriting. It just worked. It feels like cheating.

But you have to be careful. You can’t just trust it blindly.

// The Prompt

"Analyze the input text. If it contains a bug report, return JSON matching this schema: { 'type': 'bug', 'severity': 'high' }. Do not explain. Just JSON."

Notice the “Do not explain” part. That is crucial. You are piping this output into another node. If Claude adds “Here is your JSON:” before the actual bracket, your next node will choke. You need to be strict.

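Even with a strict prompt, it is worth having a fallback for the day a preamble sneaks through. The sketch below is one way to do it in a Code node: grab everything from the first opening brace to the last closing brace and parse that. The function name parseModelJson is my own, and the approach assumes the reply contains exactly one JSON object and no stray braces in the surrounding chatter.

```javascript
// Pull a single JSON object out of a model reply, tolerating text around it.
function parseModelJson(replyText) {
  const start = replyText.indexOf("{");
  const end = replyText.lastIndexOf("}");
  if (start === -1 || end <= start) {
    throw new Error("No JSON object found in model reply");
  }
  // JSON.parse still throws if the extracted text is malformed.
  return JSON.parse(replyText.slice(start, end + 1));
}

const chatty = 'Here is your JSON: {"type": "bug", "severity": "high"}';
console.log(parseModelJson(chatty).severity); // → high
```

Letting it throw is deliberate. A loud failure at this node is easier to catch than a half-parsed object three nodes later.
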
When the Robot Writes the Script

There is a level above just processing text. This is where we get into automated code generation.

Sometimes, using an AI node for every single item in a loop is too slow. Or too expensive. If you are processing 50,000 rows of a CSV, you don’t want to pay for 50,000 API calls just to format a date.

This is where you flip the script. You don’t ask Claude to do the work. You ask Claude to write the code that does the work.

You go to the Code node in n8n (formerly the Function node). You describe what you need in plain English inside the AI assistant tab (or copy-paste from Claude’s web interface).

“Write a JavaScript function for n8n that filters this array, keeps only items where the ‘status’ is ‘active’, and converts the ‘date’ field to YYYY-MM-DD.”

Claude spits out the JavaScript or Python. You review it. Maybe you even paste it into your favorite Python IDE to debug it first, just to be safe. Then, you hit run.

Now, the code runs locally on your machine or server. It’s free. It’s instant. You used the intelligence to build the tool, but you aren’t paying for intelligence every time you use the tool. This balances the low-code vs code-heavy debate. You aren’t avoiding code; you’re just not typing it yourself.
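
For the prompt above, the result might look something like this sketch. It is my own version rather than a captured Claude reply, and it assumes the usual n8n item shape of { json: { ... } } plus dates that JavaScript's Date can parse. The function name and sample rows are invented.

```javascript
// Keep only active items and normalize their date to YYYY-MM-DD.
function keepActiveWithIsoDate(items) {
  return items
    .filter((item) => item.json.status === "active")
    .map((item) => {
      const parsed = new Date(item.json.date);
      const date = Number.isNaN(parsed.getTime())
        ? null // unparseable date: flag it rather than guess
        : parsed.toISOString().slice(0, 10); // YYYY-MM-DD, in UTC
      return { json: { ...item.json, date } };
    });
}

const sample = [
  { json: { id: 1, status: "active", date: "2024-03-05T10:30:00Z" } },
  { json: { id: 2, status: "inactive", date: "2024-03-06T08:00:00Z" } },
  { json: { id: 3, status: "active", date: "not a date" } },
];
console.log(keepActiveWithIsoDate(sample));
```

Inside a Code node set to run once for all items, the last line would be return keepActiveWithIsoDate($input.all()); instead of the console.log. Note that toISOString works in UTC, so a timestamp near midnight in another timezone can land on the neighboring day.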

Does it always work? No.

Sometimes it hallucinates a method that doesn’t exist in the n8n environment. Sometimes it forgets that n8n uses a specific items array structure. You hit run, and you get red text.

That’s fine. You paste the error back to Claude. “You messed up the array mapping.” It apologizes and fixes it. It’s a conversation. It’s messy. But it is still ten times faster than looking up the documentation for the DateTime library.

Hallucinations and Handbrakes

Let’s talk about the danger.

AI lies. It doesn’t mean to, but it does. If you ask it to extract an order ID and there isn’t one, it might just invent one that looks plausible because it wants to complete the pattern.

If you put that into production without guardrails, you are going to have a bad time. You might end up shipping a pallet of goods to a customer named “Null Undefined”.
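
One cheap guard against an invented ID is to check that the value the model returned actually appears in the text you gave it. This is a sketch under the assumption that IDs are copied verbatim from the source; the function name and the sample order numbers are made up.

```javascript
// Returns true only if the extracted value literally occurs in the source text.
function isGroundedInSource(extractedValue, sourceText) {
  if (typeof extractedValue !== "string" || extractedValue.trim() === "") {
    return false;
  }
  return sourceText.toLowerCase().includes(extractedValue.trim().toLowerCase());
}

const email = "Hi, my order ORD-48213 arrived damaged.";
console.log(isGroundedInSource("ORD-48213", email)); // → true
console.log(isGroundedInSource("ORD-99999", email)); // → false
```

It won't catch every hallucination, and it is useless for fields the model is supposed to infer (like sentiment), but for IDs, emails, and phone numbers it is nearly free.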

You need error handling in AI workflows.

Think of the AI model like a brilliant intern who is occasionally drunk. They are capable of genius work, but you need to check their math.

The best way to do this in n8n is using Structured Output Parsers within the chain, or simply verifying the data in the next step.

I always add a conditional node right after the AI. Does the output contain the fields I asked for? If I asked the model to report a confidence score, is it high enough? If not, don’t fail silently. Route it to a manual review channel (like Slack or Teams).

“Hey human, the robot is confused. Please check this one.”

This “Trust but Verify” loop is the difference between a toy project and a business process. You need a handbrake.
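
Here is roughly what that handbrake can look like as a Code node sitting in front of the conditional. The field names, the 0.8 threshold, and the route labels are all assumptions for the sake of the sketch, and the confidence value only exists if your prompt told the model to include one.

```javascript
const REQUIRED_FIELDS = ["type", "severity"]; // assumed schema
const MIN_CONFIDENCE = 0.8; // assumed threshold; tune it for your data

// Decide whether an AI output can continue or needs a human to look at it.
function routeAiOutput(output) {
  if (output === null || typeof output !== "object") {
    return { route: "manual_review", problems: ["output is not an object"] };
  }
  const problems = [];
  for (const field of REQUIRED_FIELDS) {
    const value = output[field];
    if (value === undefined || value === null || value === "") {
      problems.push(`missing field: ${field}`);
    }
  }
  if (typeof output.confidence !== "number" || output.confidence < MIN_CONFIDENCE) {
    problems.push("confidence missing or below threshold");
  }
  return { route: problems.length === 0 ? "continue" : "manual_review", problems };
}

console.log(routeAiOutput({ type: "bug", severity: "high", confidence: 0.93 }));
console.log(routeAiOutput({ type: "bug", confidence: 0.4 }));
```

The conditional node then only has to branch on route, and the problems list gives the Slack message something useful to say.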

The Agent that Never Sleeps

Linear workflows are safe. Step A leads to Step B which leads to Step C.

But real problems aren’t linear. They are loopy.

This is where the n8n AI Agent node shines. This is different from a standard completion. An agent has access to tools. You give it a toolbox: a calculator, a Google Search node, a database connector, and an n8n webhook integration.

You tell the Agent: “Manage the inventory.”

That’s the prompt.

The Agent looks at the stock. It sees the stock is low. It decides to use the Database Tool to check recent sales velocity. It sees sales are high. It decides to use the Email Tool to alert the supplier.

You didn’t program that path. You just gave it the goal and the tools.
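
If you want a mental model of what the Agent node is doing, it is a loop: ask the model what to do next, run the tool it picked, feed the result back, repeat. The toy below is not n8n's implementation. The decide function is a scripted stand-in for the model, and the tools are stubs with invented names and numbers, but the shape of the loop is the point.

```javascript
// Toy agent loop. `decide` stands in for the model call.
function runAgent(goal, tools, decide, maxSteps = 5) {
  const history = [];
  for (let step = 0; step < maxSteps; step++) {
    const action = decide(goal, history);
    if (action.done) break;
    const tool = tools[action.tool];
    if (!tool) throw new Error(`Unknown tool: ${action.tool}`);
    history.push({ tool: action.tool, result: tool(action.input) });
  }
  return history;
}

// Stub tools with hard-coded data.
const tools = {
  checkStock: () => ({ sku: "WIDGET", onHand: 4 }),
  salesVelocity: () => ({ unitsPerDay: 12 }),
  emailSupplier: (input) => ({ sent: true, message: input }),
};

// Scripted decisions that mimic the inventory walkthrough.
function scriptedDecide(goal, history) {
  if (history.length === 0) return { tool: "checkStock" };
  if (history.length === 1) {
    return history[0].result.onHand < 10 ? { tool: "salesVelocity" } : { done: true };
  }
  if (history.length === 2) {
    return history[1].result.unitsPerDay > 5
      ? { tool: "emailSupplier", input: "Please restock WIDGET" }
      : { done: true };
  }
  return { done: true };
}

const trace = runAgent("Manage the inventory.", tools, scriptedDecide);
console.log(trace.map((entry) => entry.tool));
```

The maxSteps cap matters more than it looks. A real model can keep picking tools forever, and every pick is an API call you pay for.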

This is where Claude Code in n8n becomes terrifyingly powerful. The logic isn’t in the lines connecting the nodes; the logic is in the prompt. The workflow decides its own path based on the data it sees.

It requires a different mindset. You are giving up control. You have to be okay with that. You have to test it, watch it, and refine the prompt until the Agent behaves the way you want.

The Last Line of Defense

We are at a point where the barrier to entry for building complex, robust software is dissolving.

It used to be that if you couldn’t write the syntax, you couldn’t build the thing. You were locked out. Now, the syntax is cheap. The code is just a detail.

Your job is shifting. You aren’t the bricklayer anymore. You are the architect. You need to understand the flow of data, the logic of the business, and the safeguards needed to keep it running.

The Claude Code in n8n integration is just one example of this shift, but it’s a potent one. It allows you to build things in an afternoon that used to take a development team a week.

So don’t just stare at that blinking cursor. Throw a prompt at it. See what comes back. Break it. Fix it.

The machine is ready to work. You just have to tell it what to do.

Go break something.
